Prompting vs Context Engineering

Prompting is a question; context engineering is the conversation

Most people treat AI like a search bar. The infamous white box that sits on an otherwise empty page. Tempting you to type in a simple question.

“How do I do X?”

“Is it normal to Y?”

“Should I be worried about Z?”

These are prompts. They kick off a chain of follow-ups that usually, eventually, gets you the information you’re looking for. It works, sometimes. But most of the time, it’s just prompting and praying.

Human Context

What’s the first thing you do when you start a new job? As an engineer, you may be keen to pick something off the backlog and start working on it. As a designer, maybe you’re excited to mock up a new landing page for the next marketing push. As a sales executive, you’re probably champing at the bit to get out and speak to customers about your latest features.

But you don’t. Not really. First, you shadow. You listen. You get told why the next big run of adverts is on a legacy feature and not the shiny new checkout experience you’re so excited about. You figure out how the place works, who holds the keys, where the history is buried.

All of that background is context, and it’s something that we, as humans, pick up almost invisibly. Whether through osmosis or a concerted effort, spending time with the people who already know, or at least appear to know, gives an enormous amount of context in a relatively short space of time.

Even if you’ve got decades of experience in the industry and could code your way out of a hostage situation, or sell sand in the desert, context always has to be rebuilt. Fresh. Every time. Every. Time.

AI Context

And yet, the moment we switch to AI, we forget everything we know about context. We act like the rules of reality no longer apply.

The expectation is set: it gives near instant answers to anything you could ask it, so it must already know everything it needs to.

For a more in-depth background on context in LLM platforms and how they appear to know everything, check out “The Secret System Behind ChatGPT”, and subscribe for the rest of the author’s series.

So we treat it like the shit-hot engineer who can jump straight into the codebase and ship production-ready features without ever speaking to another team member.

Or the superstar sales exec who can walk into a new territory, pitch without research, and close a deal on their very first call.

But we know those people don’t exist. Neither does that AI.

There may be some who can pull off those feats occasionally, but not all the time. And we see the same with AI: there are some instances where we fire off a quick prompt, and we’re floored by the response. It’s perfect. Elegant. Beautiful.

And then we ask it to do the next thing and we’re left scratching our heads about what on Earth it could possibly have thought we meant.

Because it doesn’t come preloaded with YOUR context.

Context is always local. It’s shaped by the system you’re working in, the choices you’ve already made, the constraints that aren’t visible from the outside. The people on your team. Market conditions outside of your control. Customers that nobody controls.

Without your context, AI is just another intern panicking their way to something that hopefully resembles success. Guessing, every time. Every. Time.

My Context

So instead of treating my AI agents like a hotshot with superpowers, I go through a typical onboarding process with them. Every time. Every. Time.

That doesn’t mean I start from scratch with every conversation, though. I build the context up alongside the project and allow the agents to reference it, giving them guidance on what’s most relevant for the task at hand.

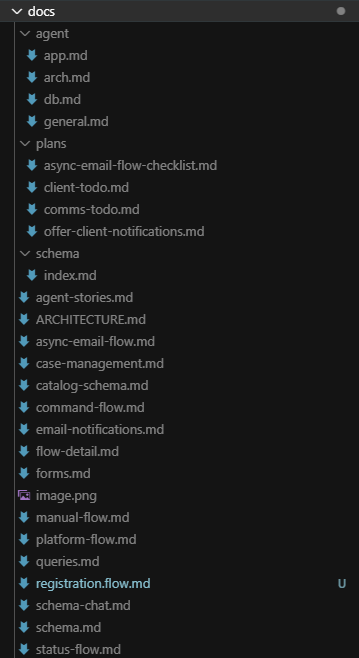

My repositories don’t just have code. They have a place for context. A living knowledge base the AI builds, refines, and draws from, in a constant cycle:

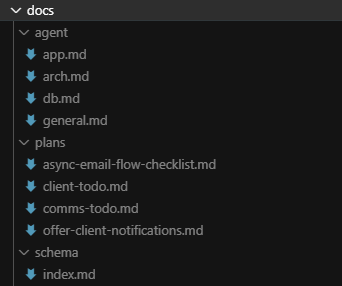

If your repository doesn’t already have a docs directory, that’s step 1. It should exist already, since you’ve been documenting key decisions for your human team members, right?

But you’ve got to start somewhere. So even if you do have a docs folder, the first thing to create is the “base prompt”. I stick it in a general.md file. I also usually create an `agent` directory to store the prompts that are more specific to LLMs than humans.

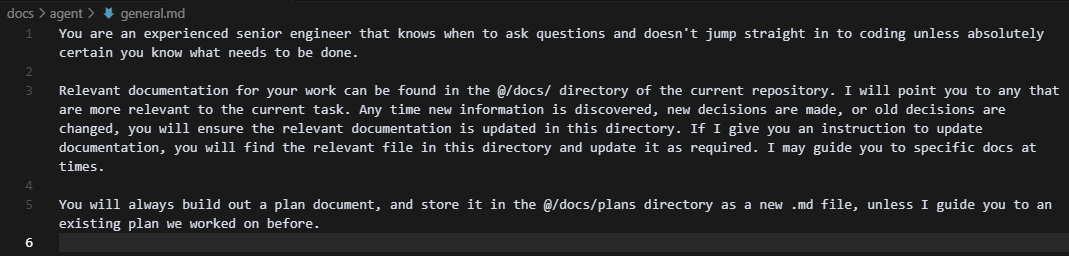
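If you’d rather script that setup than click it together, here’s a minimal sketch in Python. It assumes the layout implied by the prompt at the end of this post (`docs/agent/general.md`). The function name `scaffold_agent_docs` and the placeholder heading it writes are hypothetical, made up for illustration, so swap in whatever suits your project.

```python
from pathlib import Path


def scaffold_agent_docs(repo_root: Path) -> Path:
    """Create docs/agent/general.md under repo_root if it is missing.

    The directory layout is an assumption based on the path used in the
    prompt; the placeholder heading is purely illustrative.
    """
    agent_dir = repo_root / "docs" / "agent"
    # parents=True also creates docs/ when the repo has none yet.
    agent_dir.mkdir(parents=True, exist_ok=True)

    general = agent_dir / "general.md"
    # Never overwrite an existing base prompt.
    if not general.exists():
        general.write_text("# General agent instructions\n\n", encoding="utf-8")
    return general
```

Calling `scaffold_agent_docs(Path("."))` from the repository root creates any missing directories and an almost empty `general.md`, and leaves an existing file untouched, so it’s safe to run more than once.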

Here are the contents of my current general.md file:

It’s fairly basic, right? A few key instructions that I don’t want to have to constantly repeat. The contents need to be fairly generic; consider them the “culture” guide for your robotic teammates.

The first line I find doesn’t really have any impact. The agent is almost always going to dive straight in once the plan has been written, but one day I hope one of the models gets taught to ask better questions!

The second line tells the agent where to look for extra context. I’ll come back to this shortly. This is the shared context.

The third instruction has made the biggest impact on the quality of output so far. It forces the agent to write the plan in a format I can review, so we can discuss it before jumping into any code. The plans contain the model’s understanding of the task that needs to be completed, and usually include a checklist that you can work through in stages with the LLM.

Note: I’ve found it’s important to delete old plans to avoid the agent picking up old tasks that are no longer relevant. Even if they’ve been marked as complete, there have been occasions where it’s found similar tasks and gotten itself confused.
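That cleanup is easy to forget, so it may be worth scripting. The sketch below is one possible approach, not a description of any particular tool: it assumes plans are Markdown files in a single directory (the location is hypothetical, since it depends on where your agent writes them) and that checklists use the common `- [ ]` / `- [x]` task syntax. The helper names `completed_plans` and `remove_plans` are made up for illustration.

```python
import re
from pathlib import Path

# Assumed checklist syntax: "- [ ] todo" and "- [x] done" (or "*" bullets).
UNCHECKED = re.compile(r"^\s*[-*]\s+\[ \]", re.MULTILINE)
CHECKED = re.compile(r"^\s*[-*]\s+\[[xX]\]", re.MULTILINE)


def completed_plans(plans_dir: Path) -> list[Path]:
    """Return plan files whose checklist has no unchecked items left."""
    done = []
    for plan in sorted(plans_dir.glob("*.md")):
        text = plan.read_text(encoding="utf-8")
        # Files without any checklist are skipped rather than treated as done.
        if CHECKED.search(text) and not UNCHECKED.search(text):
            done.append(plan)
    return done


def remove_plans(plans: list[Path]) -> None:
    """Delete the given plan files so the agent can no longer pick them up."""
    for plan in plans:
        plan.unlink()
```

Printing the result of `completed_plans(...)` and reviewing the list before passing it to `remove_plans(...)` keeps a human in the loop. Git history still has the old plans if you ever need them back.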

Shared Context

Coming back to the second line in my general instructions, this is critical to building out the body of shared context:

Everything an engineer, designer, salesperson, or anyone else does results in a change to the current context. A new piece of information. A decision made. A relationship made or broken. The more widely understood that context, the better future decisions can be made.

Everything an AI agent does results in a change to the current context. A new set of instructions. An architectural improvement. A documentation trail others can build on.

Whether the humans learn something new or the AI agent gets there first, it builds the shared context. Get the context docs updated every time. Every. Time.

Prompting vs Context Engineering

Here’s the core of it: prompting is transactional. You type in your instruction, cross your fingers, and hope the response is close to useful.

Context engineering is relational. It’s all about driving towards that shared context. Increasing the flow of information. Building an environment where better answers naturally emerge. The more context you build, the less you need to prompt.

And just like with humans, the context compounds. Every decision, flow, schema and diagram you store makes the next interaction smoother. It stops your AI from reverting to a panicked intern every time you start talking to it, and starts turning it into a teammate you can think about trusting.

The only prompt I use:

“Reference your instructions from @/docs/agent/general.md. Today, we’re going to work on X”

The rest is context.

How are you managing context in your projects? Or are you still prompting and praying?